The Just-in-Time/Lean approach transformed how products were made all over the world.

But like any system, it wasn’t perfect. It was just another application of even more fundamental principles, suited to a particular time and place. Even as it was being developed, its flaws started to become apparent.

The biggest problem with Just-in-Time/Lean manufacturing was that it demanded stability and predictability in both the internal (production) environment and external (market) environment.

Internally, every factor that could possibly affect variability – the sworn enemy of high-precision production – had to be tightly controlled to multiple decimal places.

This explains the dirty secret of Lean: it takes an incredibly long time to implement and iron out all the kinks. Nine months per production line was the (rather optimistic) recommendation of the Toyota Supplier Support Center at the time. In practice, rolling the system out across an entire company sometimes took as long as 10 years.

In the external environment, the demand for predictability was, of course, even more problematic. Even though Toyota’s flow of orders was relatively stable, it still had to establish a way of receiving orders (and promising deliveries) that limited how much orders could change from one month to the next.

Most companies are not able to impose such favorable terms on their suppliers and customers. Worst of all, this source of instability lay outside production’s control: it came from the way products were marketed and sold, not the way they were produced.

This is the situation into which an obscure Israeli physicist named Eliyahu Goldratt stepped in 1984, with the publication of his book The Goal. Goldratt in many ways played the same role as Ohno decades earlier, translating the fundamental principles of flow to a whole new paradigm.

What Goldratt realized was that the most effective way to limit work-in-process was not physical limits (belts and cards), but time. He proposed restricting work-in-process not by fussing about with lines and containers and parts, but by controlling the timing of when new work-in-process was released into production.

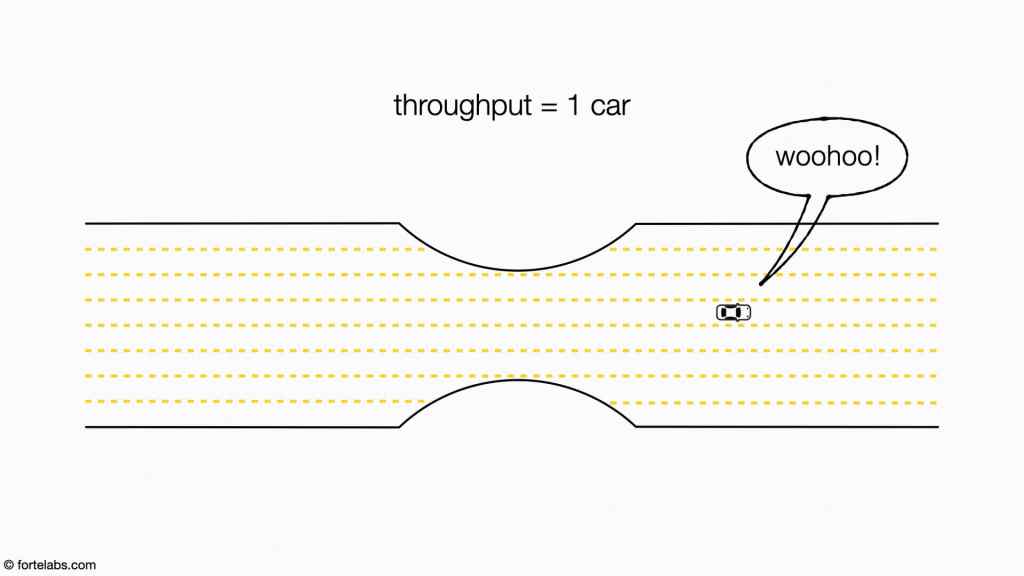

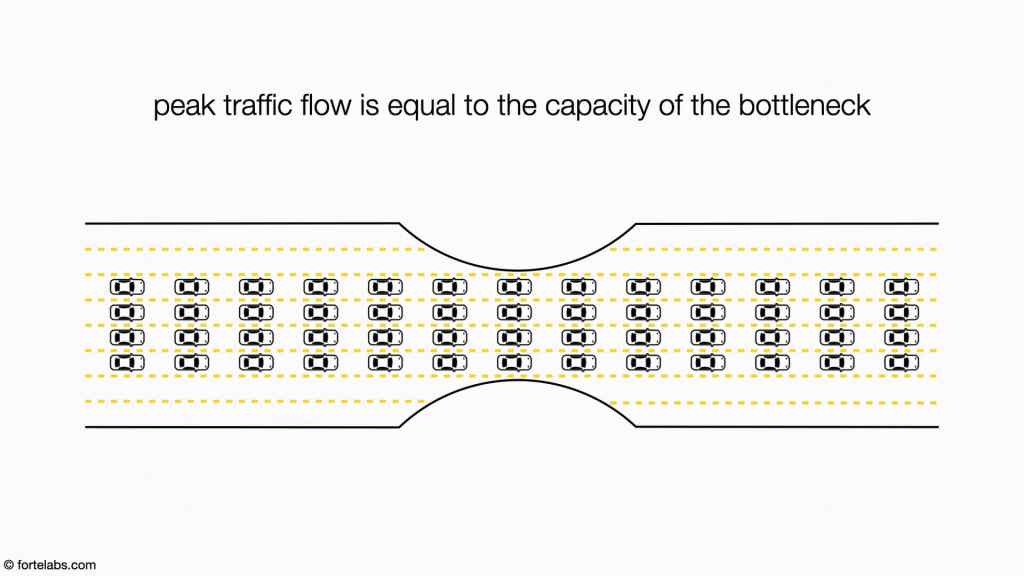
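To make Goldratt’s idea concrete, here is a minimal sketch in Python of a line whose only constraint is a single bottleneck. It is a hypothetical model, not Goldratt’s own method: `run_line`, its parameters, and the `Result` type are invented for illustration, and the model assumes every unit of work passes through one bottleneck that can finish a fixed number of units per tick. It compares releasing work at the bottleneck’s pace with releasing it as fast as upstream departments can start things.

```python
from dataclasses import dataclass


@dataclass
class Result:
    completed: int  # units that made it out of the bottleneck
    final_wip: int  # units released but still waiting at the end


def run_line(release_per_tick: int, bottleneck_capacity: int, ticks: int) -> Result:
    """Hypothetical discrete-time model of a line with one bottleneck.

    Each tick, `release_per_tick` new units are released into the line,
    and the bottleneck finishes at most `bottleneck_capacity` waiting units.
    """
    queue = 0
    completed = 0
    for _ in range(ticks):
        queue += release_per_tick                # release new work into the line
        done = min(queue, bottleneck_capacity)   # bottleneck can only do so much
        queue -= done                            # whatever is left keeps waiting
        completed += done
    return Result(completed=completed, final_wip=queue)


# Release work exactly at the bottleneck's pace.
paced = run_line(release_per_tick=3, bottleneck_capacity=3, ticks=100)
# Release work faster than the bottleneck can absorb it.
flooded = run_line(release_per_tick=5, bottleneck_capacity=3, ticks=100)

print(paced)    # Result(completed=300, final_wip=0)
print(flooded)  # Result(completed=300, final_wip=200)
```

Both runs finish the same 300 units, but the flooded line ends with 200 units of work-in-process piled up in front of the bottleneck. Controlling *when* work is released, rather than how much physical space it may occupy, is the idea behind what Theory of Constraints practitioners call Drum-Buffer-Rope; this sketch leaves out the protective buffer that scheme keeps in front of the bottleneck.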

Let’s use an analogy to understand why this approach was so revolutionary: imagine a busy freeway whose flow we’re trying to maximize. If you wake up at 5am, you might be the first car on the freeway. With just one car on the road, there’s nothing standing in your way and you can drive as fast as you want.

For a while, each additional car increases throughput, since all the cars can drive at the same speed without encountering obstacles or slowing each other down.

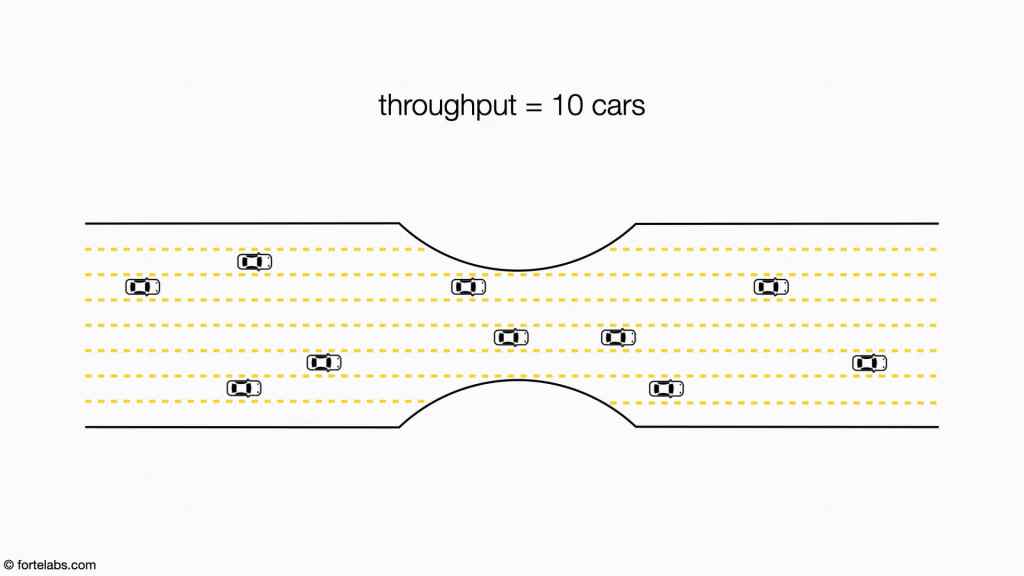

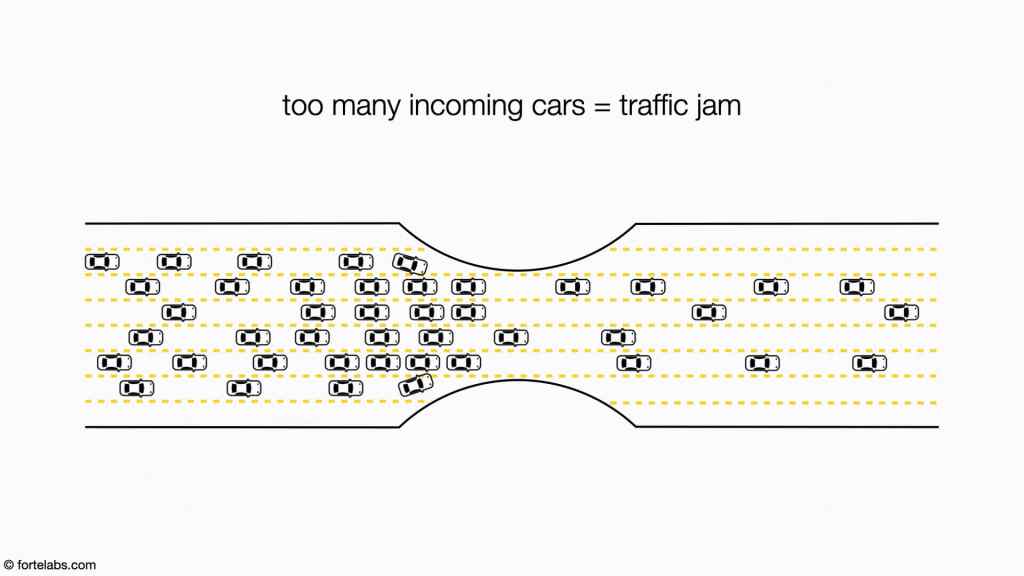

But this only works up to a point. Eventually, the increasing number of cars will start “interacting” – they will run out of space to drive as fast as they want and begin bunching up behind each other.

Throughput will peak, and then start dropping. Perhaps you’ve noticed this tipping point while driving on the freeway: one minute all the cars are evenly spaced and flowing at a good pace, and then, out of nowhere, a few additional cars throw the system into disequilibrium and traffic grinds to a halt.
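The shape of this rise and fall can be sketched with a common textbook simplification from traffic engineering, the triangular fundamental diagram, which relates flow (cars per hour) to density (cars per kilometer of lane). The parameter values below are illustrative assumptions, not measurements of any real freeway.

```python
# Illustrative parameters (assumptions, not measured values).
FREE_SPEED = 100.0   # km/h: how fast cars drive when nothing is in the way
WAVE_SPEED = 20.0    # km/h: how fast congestion propagates backward
JAM_DENSITY = 120.0  # cars per km per lane when traffic is at a standstill

# Density at which the free-flow and congested branches meet (peak flow).
CRITICAL_DENSITY = WAVE_SPEED * JAM_DENSITY / (FREE_SPEED + WAVE_SPEED)


def flow(density: float) -> float:
    """Cars per hour per lane at a given density (triangular fundamental diagram).

    Below the critical density, every car drives at FREE_SPEED, so flow grows
    linearly with density. Above it, cars are limited by the space in front of
    them, and flow falls until it reaches zero at JAM_DENSITY.
    """
    if not 0 <= density <= JAM_DENSITY:
        raise ValueError("density must be between 0 and JAM_DENSITY")
    return min(FREE_SPEED * density, WAVE_SPEED * (JAM_DENSITY - density))


for k in (5, 10, CRITICAL_DENSITY, 40, 80, 120):
    print(f"{k:6.1f} cars/km -> {flow(k):7.1f} cars/h")
```

With these assumed values, flow rises linearly to a peak of 2,000 cars per hour at 20 cars per kilometer, then declines until it reaches zero at the jam density of 120 cars per kilometer. Past the critical point, every car you add lowers throughput.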

Our question is, “What is the optimal number of cars to allow onto the freeway to achieve peak traffic flow?”

The answer is obvious when seen from above like this: peak traffic flow is equal to the capacity of the bottleneck.

In other words, the optimal number of cars is however many can continuously flow through the narrowest, slowest section of freeway. This might seem like a terrible waste. What about all the empty lanes (“unused capacity”) clearly visible at the sides? Wouldn’t it be far more efficient to fill every lane with as many cars as possible?

However, remember the lesson we learned about the dysfunctional company?

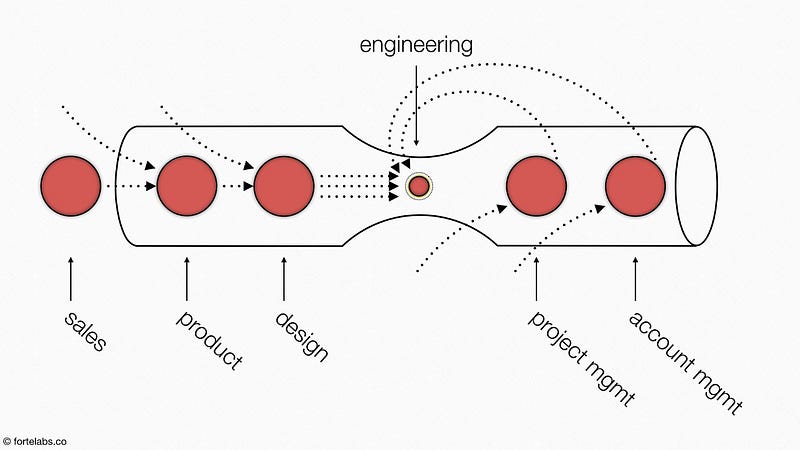

You’ll recall that the red indicates everyone in the company is overloaded and overworked. The more work everyone sends to Engineering (which is the bottleneck in this organization), the less time the engineers have to actually get anything done. The less they produce, the more new projects everyone else starts “in the meantime,” thus sending even more work to the bottleneck in a vicious cycle with no end in sight.

It was obvious in this case what management needed to do to fix this situation: maintain excess capacity. Instead of having everyone “stay busy” all the time for fear of reprimand, they should not just allow but require every department except Engineering to deliberately leave some of its productive bandwidth unused and keep it in reserve.

How can that be? How in the world can leaving part of your day unused be the most productive thing you can do? Because only by holding back the work they could be starting can the other departments protect Engineering and its scarce bandwidth.

To be clear, this requires a lot of trust. It is a relationship of mutual interdependence. Some people in Marketing, Product, Operations, and Sales may have very good reasons for wanting to stay as busy as possible. Their reputation, self-respect, and even promotions and raises may depend on their manager’s perception of their individual contribution.

Yet one individual can never accomplish anywhere close to as much as an entire organization rowing together in the same direction at the same pace. As we’ve seen, you have to make a choice: either to optimize at the level of individuals, or at the level of the whole group. You can’t choose both, because they lead to very different decisions and tradeoffs.

Don’t fill the time of every person in the company. Don’t use every stretch of lane on the freeway. It is only the capacity of the bottleneck that matters. Protect it at all costs.
